Definition

Processor consistency is a memory consistency model for multiprocessor systems. Under this model, the writes issued by any single processor are observed by every other processor in the order specified by that processor's program, and all processors see the writes to any one memory location in the same order. Writes issued by different processors to different locations, however, may be observed in different orders by different processors. This makes the model weaker than sequential consistency, in which every execution behaves as if the operations of all processors were performed in a single total order. A read may not immediately return the most recent write to the same data by another processor, but the sequence of values that each processor reads from a given location is the same. Processor consistency typically arises in parallel processing and parallel computing.
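As a rough illustration, the following Python sketch checks a set of observed write orders against two rules commonly attributed to processor consistency: each processor's writes are seen in program order, and all observers agree on the order of writes to any single location. The tuple layout and the function names are hypothetical, chosen only for this example, and the check ignores reads entirely.

```python
from itertools import combinations

# Each write is a tuple: (issuing_processor, program_index, location, value).
# This representation is an assumption made for the sketch.

def preserves_program_order(view):
    """True if each processor's writes appear in the order they were issued."""
    last_index = {}
    for proc, index, _location, _value in view:
        if index < last_index.get(proc, -1):
            return False
        last_index[proc] = index
    return True

def agrees_per_location(view_a, view_b):
    """True if both views order the writes to every single location identically."""
    locations = {w[2] for w in view_a} | {w[2] for w in view_b}
    return all(
        [w for w in view_a if w[2] == loc] == [w for w in view_b if w[2] == loc]
        for loc in locations
    )

def is_processor_consistent(views):
    """Simplified check over observed write orders only."""
    return all(preserves_program_order(v) for v in views) and all(
        agrees_per_location(a, b) for a, b in combinations(views, 2)
    )

x1 = (0, 0, "x", 1)  # P0's first write:  x = 1
x2 = (0, 1, "x", 2)  # P0's second write: x = 2
y1 = (1, 0, "y", 1)  # P1's only write:   y = 1

view_p2 = [x1, y1, x2]    # observer P2 sees y = 1 between P0's writes
view_p3 = [y1, x1, x2]    # observer P3 sees y = 1 first
reordered = [x2, x1, y1]  # P0's writes out of program order

print(is_processor_consistent([view_p2, view_p3]))    # True
print(is_processor_consistent([view_p2, reordered]))  # False
```

The two observers interleave the writes of P0 and P1 differently, which the check accepts, while the view that swaps P0's own writes is rejected.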

Memory consistency model

A consistency model is essentially a contract between software and memory. If the software follows the agreed rules, the memory behaves correctly; otherwise, correct operation is not guaranteed. The memory consistency model describes what counts as correct behavior for memory operations during program execution. Memory operations comprise reads and writes, and each operation is delimited by two points in time: its invocation (Invoke) and its response (Response). Assuming there is no pipelining (that is, each processor executes its instructions strictly in sequence), suppose there are a total of processors in the system, and each processor can issue

Parallel processing and parallel computing

Parallel processing is a computing method in which a computer system performs two or more processes simultaneously. It can work on different aspects of the same program at the same time, and its main purpose is to save time when solving large, complex problems. To use parallel processing, the program must first be parallelized, that is, its work must be divided among different processes or threads. Because the parts of a problem often depend on one another, parallelization cannot be done automatically. Nor does parallelism guarantee a speedup. In theory, n processors working in parallel can run up to n times as fast as a single processor.
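The theoretical limit can be written as a speedup ratio. The symbols below are notation introduced here for illustration: T_1 is the run time on one processor, T_n the run time on n processors, and S(n) the resulting speedup.

$$
S(n) = \frac{T_1}{T_n}, \qquad T_n = \frac{T_1}{n} \;\Rightarrow\; S(n) = n
$$

As a hypothetical example, a job that takes T_1 = 120 s on one processor would ideally take T_4 = 30 s on four processors, giving S(4) = 4. If dependencies between the parts stretch the parallel run to 40 s, the speedup is only 120 / 40 = 3.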

Parallel computing generally refers to a computing mode in which many instructions are executed at the same time. Because the instructions proceed simultaneously, the computation can be broken down into small parts that are then solved concurrently.

Computer software can be divided into several computation steps. To solve a particular problem, the software applies an algorithm by running a series of instructions. Traditionally, these instructions are sent to a single central processing unit, which runs them sequentially. In this approach, only a single instruction is executed at any one time (at the processor level, compare microprocessors, CISC, and RISC; the concept of the pipeline; and the later hardware and software developments built on the pipeline to improve instruction-processing efficiency, such as branch prediction, forwarding, the instruction stack in front of each arithmetic unit, and assembly programmers reordering program code). Parallel computing instead uses multiple arithmetic units running at the same time to solve the problem.

In contrast to serial computing, parallel computing can be divided into parallelism in time and parallelism in space. Parallelism in time is pipelining. Parallelism in space uses multiple processors to perform computations concurrently, and it is the main subject of current research. From the perspective of program and algorithm designers, parallel computing can be divided into data parallelism and task parallelism. Data parallelism breaks a large task down into several identical subtasks, which makes it easier to handle than task parallelism.

Parallel machines are classified as Single Instruction Stream Multiple Data Stream (SIMD) or Multiple Instruction Stream Multiple Data Stream (MIMD), and the commonly used serial machine is also called Single Instruction Stream Single Data Stream (SISD). MIMD machines fall into five common categories: Parallel Vector Processor (PVP), Symmetric Multiprocessor (SMP), Massively Parallel Processor (MPP), Cluster of Workstations (COW), and Distributed Shared Memory multiprocessor (DSM).
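The difference between data parallelism and task parallelism can be sketched in Python with a thread pool. The function names and the sample data are hypothetical, and the code shows only how the work is divided. On standard CPython builds, threads do not speed up CPU-bound work like this, so no performance gain should be expected from the sketch itself.

```python
from concurrent.futures import ThreadPoolExecutor

def square(x):
    return x * x

def data_parallel_sum_of_squares(values, workers=4):
    """Data parallelism: every worker runs the same operation on its own slice."""
    chunks = [values[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(lambda chunk: sum(square(v) for v in chunk), chunks)
        return sum(partial_sums)

def task_parallel_stats(values):
    """Task parallelism: different operations run concurrently on the same data."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = {
            "min": pool.submit(min, values),
            "max": pool.submit(max, values),
            "total": pool.submit(sum, values),
        }
        return {name: future.result() for name, future in futures.items()}

numbers = list(range(1, 11))
print(data_parallel_sum_of_squares(numbers))  # 385
print(task_parallel_stats(numbers))           # {'min': 1, 'max': 10, 'total': 55}
```

In the data-parallel function the subtasks are identical and differ only in their input slice, which is why this style is usually the simpler of the two to organize.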

Multiprocessor

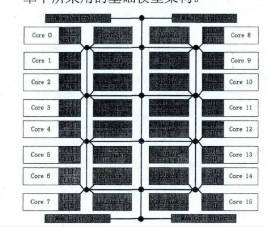

A computer with more than one processing unit, all sharing the same main memory and peripheral devices, can execute multiple programs at the same time. This hardware architecture is called a multiprocessor, and it provides multiprocessing capability.

A multiprocessor is composed of several independent computers, each of which can execute its own program independently. The processors are connected through an interconnection network, which enables data exchange and synchronization between programs. Multiprocessors are MIMD computers and differ considerably from array processors, which are SIMD computers. The essential difference lies in the level of parallelism: multiprocessors achieve parallelism at the task or job level, while array processors achieve parallelism only at the instruction level.

Multiprocessor systems can be classified in several ways.

According to how closely the machines are physically connected and how strongly they interact, multiprocessors fall into two categories: tightly coupled systems and loosely coupled systems. In a tightly coupled multiprocessor system, the physical connection between processors has relatively high bandwidth. The interconnection is generally a bus or a high-speed switch, and main memory can be shared. Because of the high transfer rate, jobs or tasks can be processed quickly in parallel. A loosely coupled multiprocessor system is composed of multiple independent computers. The processors are generally interconnected through channels or communication lines, and external storage devices can be shared. Interaction between the machines takes place at the level of files or data sets, over a lower-bandwidth connection.
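The two coupling styles can be loosely mirrored in software. In the Python sketch below, threads stand in for processors: the first function shares one variable guarded by a lock, as in a tightly coupled system with shared main memory, and the second keeps all state private and exchanges only messages through a queue, as in a loosely coupled system. This is an analogy under those assumptions, not a model of real hardware, and all names are hypothetical.

```python
import queue
import threading

def shared_memory_count(workers=4, increments=1000):
    """Tightly coupled style: workers update one shared variable under a lock."""
    state = {"counter": 0}
    lock = threading.Lock()

    def work():
        for _ in range(increments):
            with lock:  # synchronize access to the shared memory
                state["counter"] += 1

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]

def message_passing_count(workers=4, increments=1000):
    """Loosely coupled style: workers keep private state and send one result message."""
    channel = queue.Queue()

    def work():
        local = 0
        for _ in range(increments):
            local += 1
        channel.put(local)  # the only interaction with other workers

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(channel.get() for _ in range(workers))

print(shared_memory_count())    # 4000
print(message_passing_count())  # 4000
```

Both functions reach the same total. The shared-memory version synchronizes on every update, while the message-passing version communicates once per worker, which reflects the coarser, lower-bandwidth interaction described for loosely coupled systems.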

Multiprocessors can also be classified by whether their processors are alike. If every processor is of the same type and performs the same function, the system is called a homogeneous multiprocessor system. If it is composed of processors of different types that are responsible for different functions, it is called a heterogeneous multiprocessor system.